Technical Report Sample

This is a technical report sample. It presents a detailed report on a deep learning-based project collected from https://github.com. It is not the main project but a standard template for presenting your own project report. You can edit it as you like. Thank you.

Technical Report Sample on ANN:

Abstract

Using sensor data collected from a mobile phone or other device, a neural network can find patterns in the data and predict the activity a person is performing. Today, this is one of the most in-demand areas of artificial intelligence. Moreover, human activity data collected through sensors can be used to drive a bot. Raw data collected from the sensors of a mobile phone, smartwatch, or other sensor-equipped device improves the classification of human activities with machine learning algorithms. In this paper, I study a model for mobile activity recognition that uses motion sensors such as the accelerometer and gyroscope. The model already performs well; nevertheless, I tuned it and observed the effect of each change one by one. The model reaches a validation accuracy of 97.98% and a precision of 95%. This was achieved by learning from data recorded by a cellphone attached to the waist. An LSTM model was used to learn the sequence of data.

Table of Contents

Abstract

Table of Contents

List of Figures

1 Introduction

2 Background

3 Dataset

4 Methodology

5 Results and discussion

6 Conclusions and recommendations

7 Acknowledgments

8 References

Appendix A: Technical Report Sample

List of Figures

1. Different Activity Related Sensor Data

2. Model Summary

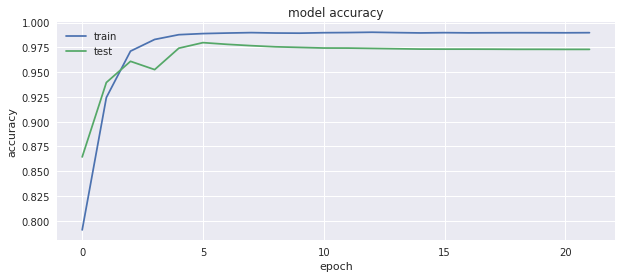

3. Model Accuracy

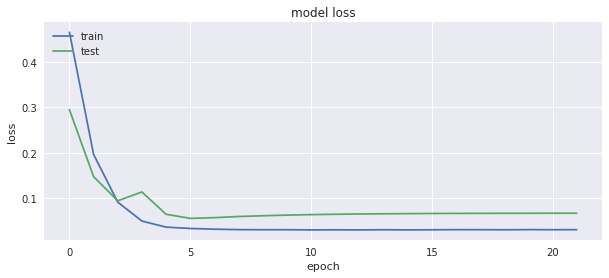

4. Model loss

1 Introduction

An artificial neural network (ANN) trained on data from a cellphone attached to the waist achieved a validation accuracy of 97.98% and a precision of 95% in recognizing the type of activity the user is doing. My work is inspired by Guillaume-chevalier/LSTM-Human-Activity-Recognition, which uses an RNN-LSTM to recognize the activity, whereas I used an ANN for the same task and achieved a better confusion matrix and validation accuracy than the RNN-LSTM. A bidirectional LSTM, on the other hand, gave around 94%, which is still lower. This validation accuracy is also the best on Kaggle. The approach might be a little different.

2 Background

Much research has been done in this area over the years. I review some of it here as background for my task.

Paniagua et al. describe how the sensors a mobile device is equipped with, such as the accelerometer, magnetic field sensor, and air pressure meter, can help in extracting the context of the user, such as location and situation. Processing the extracted sensor data is generally a resource-intensive task, which can be offloaded from mobile devices to the public cloud. The paper specifically targets extracting useful information from accelerometer sensor data. It describes the use of parallel computing with MapReduce on the cloud for training and recognizing human activities based on machine learning classifiers that can scale easily in performance and accuracy. The sensor data is collected from the mobile devices, uploaded to the cloud, and processed using classification algorithms such as Iterative Dichotomiser 3 (ID3), the Naive Bayes classifier, and K-Nearest Neighbors. The recognized activities can be used in mobile applications such as Zompopo, the application presented in the paper, which uses the data to create an intelligent calendar [1].

On the other hand, human activities are inherently translation invariant and hierarchical. Human activity recognition (HAR), a field that has attracted a great deal of attention in recent years because of its high demand in various application domains, uses time-series sensor data to infer activities. Ronao and Cho propose a deep convolutional neural network (convnet) to perform efficient and effective HAR using smartphone sensors by exploiting the inherent characteristics of activities and 1D time-series signals, while providing a way to automatically and data-adaptively extract robust features from raw data. Experiments show that convnets indeed derive relevant and more complex features with each additional layer, although the difference in feature complexity level decreases with each additional layer.

Moreover, a wider time span of temporal local correlation can be exploited (1 × 9 to 1 × 14), and a low pooling size (1 × 2 to 1 × 3) is shown to be beneficial. Convnets also achieved an almost perfect classification on moving activities, especially very similar ones that were previously perceived to be very difficult to classify. Finally, convnets outperform other state-of-the-art data mining techniques in HAR for the benchmark dataset collected from 30 volunteer subjects, achieving an overall performance of 94.79% on the test set with raw sensor data, and 95.75% with additional information from the temporal fast Fourier transform of the HAR data set [2].

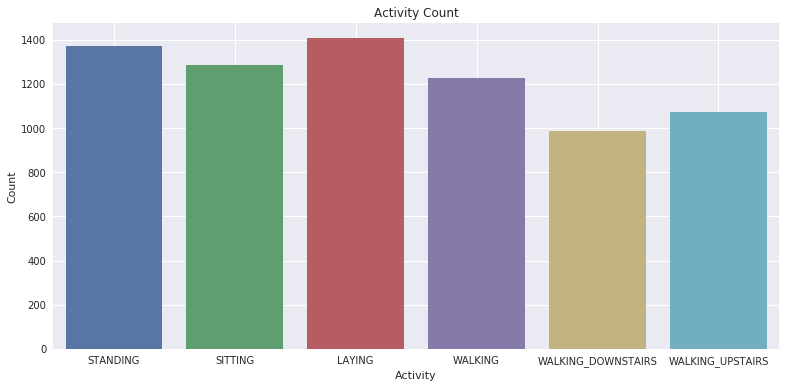

3 Dataset

The mobile sensor signals (accelerometer and gyroscope) were pre-processed with noise filters and then sampled in fixed-width sliding windows of 2.56 s with 50% overlap. The acceleration signal, which has gravitational and body motion components, was separated into body acceleration and gravity using a Butterworth low-pass filter. The gravitational force is assumed to have only low-frequency components, so a filter with a 0.3 Hz cutoff frequency was used. From each window, a vector of features was obtained by computing variables from the time and frequency domains [3].
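The windowing and gravity separation described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the dataset authors' pipeline: the 50 Hz sampling rate and the second-order filter are assumptions (the text gives only the window length, overlap, and cutoff), and the function names `sliding_windows`, `butterworth_lowpass`, and `split_gravity_and_body` are hypothetical.

```python
import math


def sliding_windows(signal, fs_hz=50.0, window_s=2.56, overlap=0.5):
    """Split a 1-D signal into fixed-width windows with fractional overlap.

    fs_hz is an assumed sampling rate; at 50 Hz a 2.56 s window is 128 samples.
    """
    width = int(round(window_s * fs_hz))
    step = max(1, int(round(width * (1.0 - overlap))))
    return [signal[i:i + width] for i in range(0, len(signal) - width + 1, step)]


def butterworth_lowpass(signal, cutoff_hz=0.3, fs_hz=50.0):
    """Second-order Butterworth low-pass filter (biquad, Q = 1/sqrt(2)).

    The order is an assumption; the text only states the 0.3 Hz cutoff.
    """
    w0 = 2.0 * math.pi * cutoff_hz / fs_hz
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / math.sqrt(2.0)  # sin(w0) / (2 * Q) with Q = 1/sqrt(2)
    a0 = 1.0 + alpha
    b0 = (1.0 - cos_w0) / 2.0 / a0
    b1 = (1.0 - cos_w0) / a0
    b2 = b0
    a1 = -2.0 * cos_w0 / a0
    a2 = (1.0 - alpha) / a0

    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in signal:
        # Direct form I difference equation.
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out


def split_gravity_and_body(total_acc, cutoff_hz=0.3, fs_hz=50.0):
    """Gravity is the low-pass output; body acceleration is the remainder."""
    gravity = butterworth_lowpass(total_acc, cutoff_hz, fs_hz)
    body = [t - g for t, g in zip(total_acc, gravity)]
    return gravity, body
```

In practice a signal-processing library would normally be used for the filter design; the hand-written biquad here only keeps the sketch dependency-free.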

Figure 1: Different Activity Related Sensor Data

4 Methodology

For feature selection, an extra-trees classifier and L1-based selection were tried, but the results were somewhat better with all features once the hyperparameters of the model were manually tuned almost to their limit, which took some time. Having fewer features would also reduce training time, but in this case neither manual, context-based feature selection nor the other techniques could be applied [4].

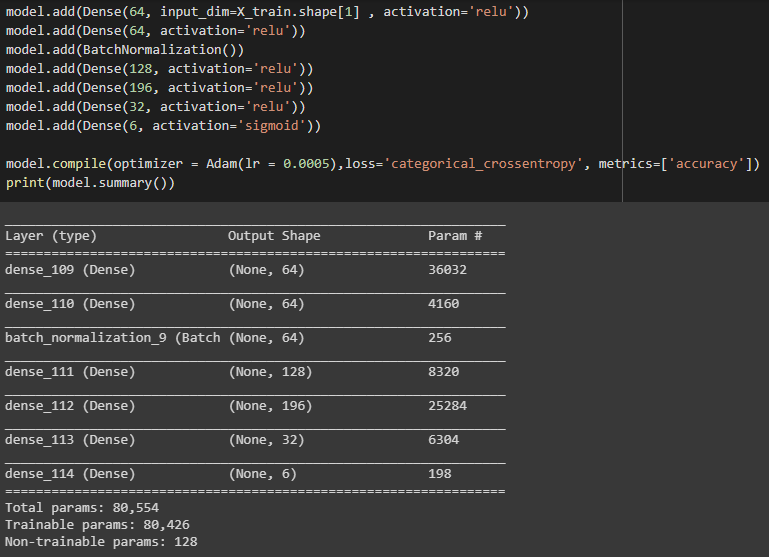

The model architecture was arrived at after repeated tuning and changes to the network. Special care was taken with the learning rate and the batch size, to which the model is sensitive and which had to be fine-tuned to get the best result. Here is the model summary:

Figure 2: Model Summary

In this sequential model, the first layer is a dense layer with Rectified Linear Unit (ReLU) activation. Batch normalization is applied after the second layer. The final layer uses sigmoid as its activation function, and the network is trained to minimize the loss with the Adam optimizer. I also changed some of the layers and added others to observe the effect. The model has more than 80k parameters in total.
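To make the parameter count concrete, the following sketch works through the arithmetic for one possible layer layout. It is an illustration under loud assumptions, not the report's actual architecture: the 561 input features and 6 activity classes come from the public description of the smartphone HAR dataset rather than from this report, and the hidden widths of 128 and 64 are hypothetical values chosen only to show how a total just above 80k can arise.

```python
def dense_params(n_in, n_out):
    """A dense layer has one weight per input-output pair plus one bias per output."""
    return n_in * n_out + n_out


def batch_norm_params(n_features):
    """Batch normalization keeps scale, shift, moving mean and moving variance
    for each feature (the last two are not trained by gradient descent)."""
    return 4 * n_features


# Assumptions, not taken from the report: input size, class count, hidden widths.
N_FEATURES, N_CLASSES = 561, 6
HIDDEN = (128, 64)  # hypothetical

layers = [
    ("dense_1 (ReLU)", dense_params(N_FEATURES, HIDDEN[0])),
    ("dense_2", dense_params(HIDDEN[0], HIDDEN[1])),
    ("batch_norm", batch_norm_params(HIDDEN[1])),
    ("output (sigmoid)", dense_params(HIDDEN[1], N_CLASSES)),
]
total_params = sum(count for _, count in layers)

for name, count in layers:
    print(f"{name:18s} {count:>7d}")
print(f"{'total':18s} {total_params:>7d}")  # 80838 for this hypothetical layout
```

Almost all of the parameters sit in the first dense layer, because it connects every input feature to every hidden unit.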

5 Results and discussion

I tuned hyperparameters such as the number of epochs and the batch size. A batch size of 256 and 22 epochs were chosen because they give good accuracy.

Figure 3: Model accuracy

This curve shows that both the training and test accuracy are good. After 22 epochs, the loss is 0.03, the accuracy is 98.9%, the validation loss is 0.06, and the validation accuracy is 97.3%, which is a very good result.
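Since the report quotes both accuracy and precision, a short sketch of how the two are derived from a confusion matrix may help. The matrix below is a made-up three-class toy example, not the model's results, and macro-averaged precision is an assumption because the report does not say which averaging was used; the function names are hypothetical.

```python
def accuracy(cm):
    """Fraction of samples on the diagonal (rows = true class, columns = predicted)."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def macro_precision(cm):
    """Unweighted mean over classes of: correct predictions / all predictions of that class."""
    n = len(cm)
    per_class = []
    for j in range(n):
        predicted_j = sum(cm[i][j] for i in range(n))  # column sum
        per_class.append(cm[j][j] / predicted_j if predicted_j else 0.0)
    return sum(per_class) / n


# Hypothetical confusion matrix for illustration only.
toy_cm = [
    [48, 2, 0],
    [3, 45, 2],
    [0, 1, 49],
]

print(f"accuracy  = {accuracy(toy_cm):.4f}")
print(f"precision = {macro_precision(toy_cm):.4f}")
```

Accuracy and precision can differ noticeably when classes are confused asymmetrically, which is one reason a report may quote both.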

Figure 4: Model loss

The plots show no sign of over-fitting, since the training and validation curves stay close to each other. The model produces good results.
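One simple way to quantify the over-fitting claim is to look at the gap between the training and validation metrics at the final epoch. The sketch below uses the figures reported for epoch 22 in this section; the 5-point threshold is an arbitrary, hypothetical rule of thumb and not something the report defines.

```python
# Final-epoch metrics as reported in this section (fractions, not percent).
final_epoch = {
    "loss": 0.03,
    "accuracy": 0.989,
    "val_loss": 0.06,
    "val_accuracy": 0.973,
}

# Positive gaps mean the model does better on training data than on validation data.
accuracy_gap = final_epoch["accuracy"] - final_epoch["val_accuracy"]
loss_gap = final_epoch["val_loss"] - final_epoch["loss"]

# Hypothetical rule of thumb: flag a gap above 5 accuracy points.
MAX_ACCURACY_GAP = 0.05
looks_overfit = accuracy_gap > MAX_ACCURACY_GAP

print(f"accuracy gap = {accuracy_gap * 100:.1f} points")
print(f"loss gap     = {loss_gap:.2f}")
print(f"flagged as over-fitting: {looks_overfit}")
```

A gap of this size is small, although the validation loss being twice the training loss suggests the curves are worth watching if training were extended beyond 22 epochs.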

6 Conclusions and recommendations

Mobile sensor data can play a revolutionary role; combined with a neural network, it can help detect unexpected activity. The Apple Watch is a good example of such a device, and its model is also trained using this type of sequence learning. Although my tuning had no notable effect on accuracy and loss, I learned from the practice. By tuning the hyperparameters, the model’s validation accuracy can be increased from 97.98% to 99% and its precision from 95% to 97%.

7 Acknowledgments

The main implementation code was collected from Kaggle.com, and the dataset was collected from the UCI Machine Learning Repository. I own nothing; I have only experimented and tried to implement a sequence learning model such as an LSTM.

8 References

| [1] | C. Paniagua, H. Flores, and S. N. Srirama, “Mobile Sensor Data Classification for Human Activity Recognition,” Elsevier, The 9th International Conference on Mobile Web Information Systems (MobiWIS 2012), pp. 585-592, 2012. |

| [2] | C. A. Ronao and S.-B. Cho, “Human activity recognition with smartphone sensors using deep learning neural networks,” Expert Systems with Applications, vol. 59, 2016. |

| [3] | D. Anguita, A. Ghio, L. Oneto, X. Parra, and J. L. Reyes-Ortiz, “Human Activity Recognition Using Smartphones Data Set,” 21st European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN 2013), Bruges, Belgium, 24-26 April 2013. |

| [4] | deadskull7, “Human-Activity-Recognition-with-Neural-Network-using-Gyroscopic-and-Accelerometer-variables,” GitHub, [Online]. Available: https://github.com/deadskull7/Human-Activity-Recognition-with-Neural-Network-using-Gyroscopic-and-Accelerometer-variables. |

Appendix A: Technical Report Sample

This is a technical report sample on ANN. Thank You for Reading!